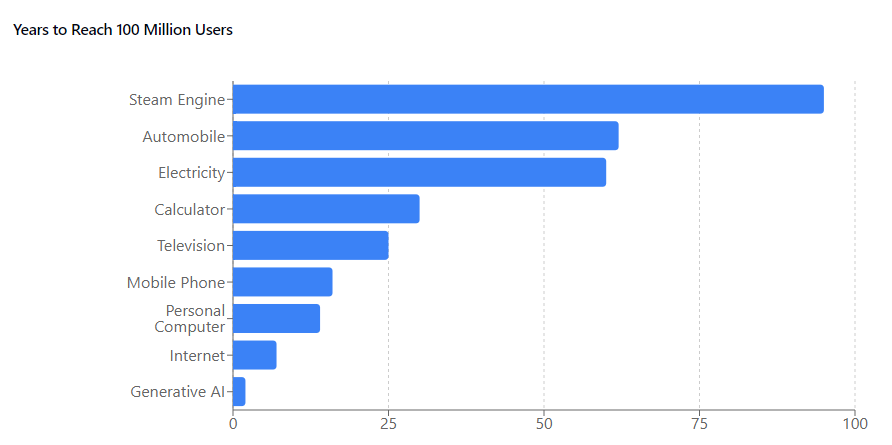

Gen AI advancement speed faster than previous major tech

Reaching 100 million users faster than Internet, Mobile Phones, Television

Credit: Generated by Anthropic Claude Sonnet 3.5

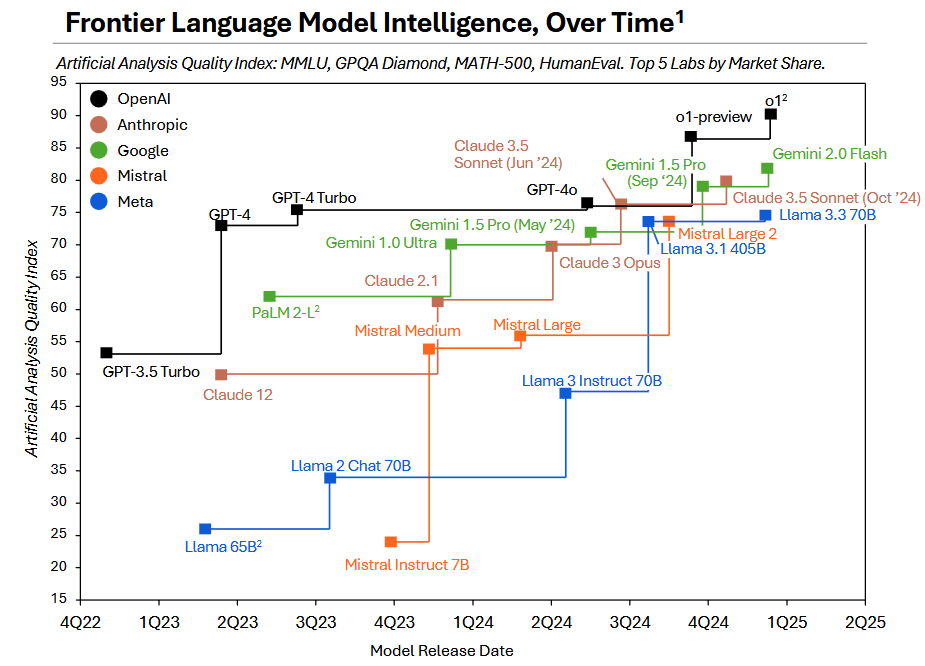

Credit: Artificial Analysis AI Review 2024

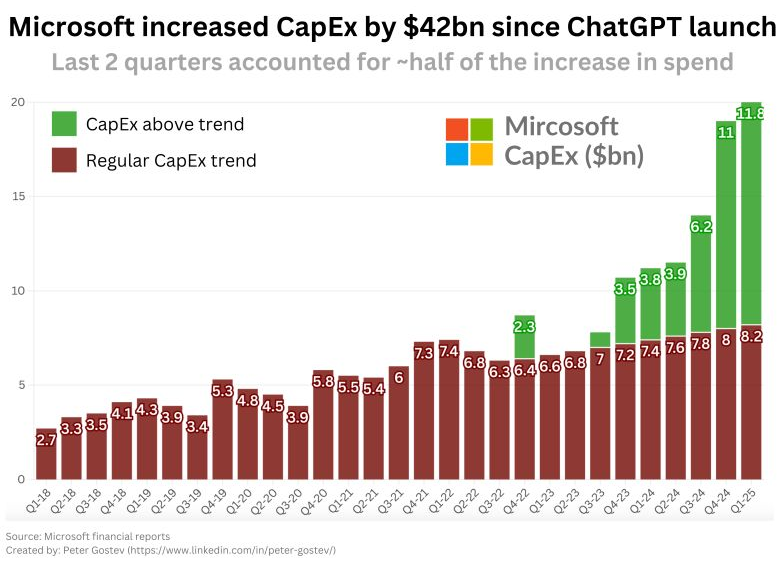

Credit: Peter Gostev, LinkedIn

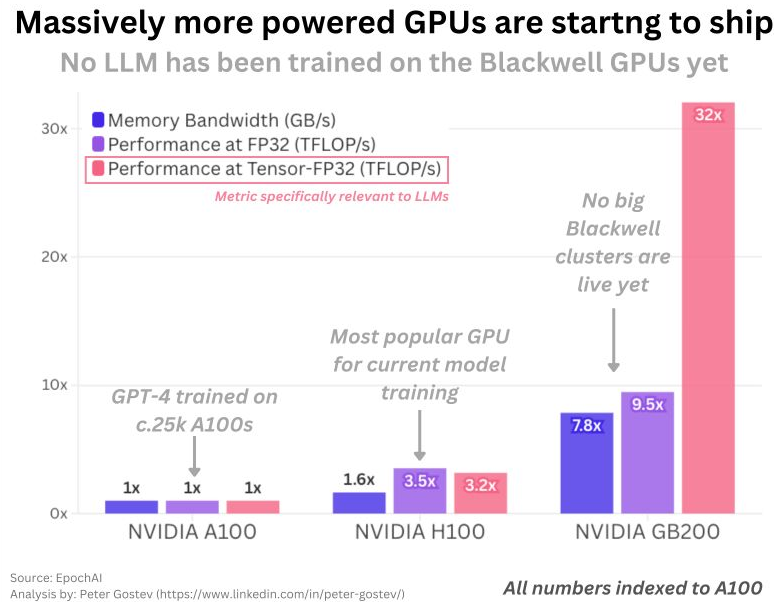

Credit: Peter Gostev, LinkedIn

One of the most impressive aspects of new generative AI tools like ChatGPT has been their ability to understand the world and generate relevant, useful and accurate text, audio, images and video. I use many of these tools in my daily work, and they multiply my productive output on a wide range of tasks.

But perhaps equally impressive is the speed of their adoption relative to other major technology advances in human history.

I asked Anthropic Claude Sonnet 3.5 to generate this first chart for me, which illustrates how many years it took each new technology to reach its first 100 million users. The steam engine took nearly 100 years, television 25 years and the internet just over 6 years. But generative AI has taken only 2 years, and adoption continues to spread very quickly.

This advancement speed adds to society's challenge

If you are not watching AI closely, you may think that the field is just making a lot of noise and waving its hands about how great AI is. But behind the scenes, the quantity and quality of these tools continue to advance at an astounding rate.

Check out the second chart, showing the capabilities of various Large Language Models (LLMs) over time. Notice how much they have advanced as a group in the two years since ChatGPT 3.5 Turbo launched. And notice how often the lead has changed regarding which model is fastest. OpenAI, Google, Anthropic and Meta have been in a tight race, and more entrants are arriving all the time.

Researchers, large companies, governments and startups are deploying these tools in increasingly innovative ways. You can expect to be interacting with AI, whether you know it or not, more and more in your daily life.

While the opportunity for societal advances and productivity boosts is huge, this pace also leaves us little time, as a civilization, to grapple with deploying these tools safely and responsibly throughout our lives.

No signs of slowing down

If you are wondering whether the hype and progress have plateaued... they haven't. Startups, venture capital firms, and large enterprises alike are making massive investments to continue building bigger, better and faster generative AI tools.

For example, Microsoft is now investing more in generative AI than in all of its other infrastructure spending combined, which amounts to over $42 billion in just the last two years. Half of that came in the second half of 2024 alone. Other large players are also investing many billions of dollars.

Additionally, NVIDIA, the chip maker fueling much of this capability, has just started to ship its latest Blackwell GPU. Independent benchmarks show it is 32 times faster than the earlier A100, which is less than 5 years old.

These are the primary chips on which LLMs are "trained", so as they get deployed into data centers, you can expect the capabilities of the LLMs they produce to continue advancing at a significant rate for years to come.

What does this all mean?

As the pundit Ethan Mollick likes to note, even if generative AI capabilities froze today, we would still need years or decades to integrate their incredible capabilities into the way that we work, live and play.

But in fact, we can expect yet more dizzying advances to be announced almost weekly. My best advice is to stay tuned in, learn how you can use these tools in your own work and personal life, and get ready for more civilization-level advances to come.